Rendering Text

I find typography fascinating. There's something magical about combining the arbitrariness of visual art with the precise metrics and systematic patterns of typeface design and text layout. I find it similar to music, where you have patterns and rulesets that bring order to the chaos of artistic expression.

I must confess that I have no formal training in typography, having picked up the main points over many years while working on various projects. At some point I even tried designing a font, but of course it turned out hideous! I was quickly disabused of the notion as the daunting amount of work became evident. (Recently I've had more success with small handmade ASCII bitmap fonts.)

I thought it would be interesting to take a look at how Lagrange renders text. The general outline is:

- TrueType font files in "resources.lgr"

- stb_truetype to rasterize individual glyphs

- glyphs stored in a big texture atlas

- measuring and drawing runs of text — glyph layout

- VisBuf for buffering visible page contents

What needs optimizing?

There are two slow tasks that need optimizing here:

- glyph rasterization

- page rendering

In both cases, we rely on the classic trade-off of spending some memory to cache the results of previous heavy work. Since we are using SDL and specifically its hardware-accelerated backend, this means both glyphs and page content need to be copied to GPU textures. (SDL can also do its drawing purely in software, but that is internal to the library and doesn't affect us.)

Glyph cache

TrueType fonts consist of vector shapes, so to draw them efficiently via SDL we must first rasterize them as bitmaps. When a specific glyph is needed, we'll just copy it from the cache texture.

The starting point is that we have a bunch of TrueType font files stored as a big binary blob inside the "resources.lgr" file. This is an uncompressed raw concatenation of all the resource files the app needs, so it's very quick to load the contents into memory. (One could even just `mmap` the file where that's supported.) At launch, all the TrueType fonts are copied to memory. This is the only copy of the font data in memory, which matters because it can amount to tens of megabytes.

For rasterization and metrics I'm using stb_truetype from the wonderful public domain stb library collection.

I chose this library because it is public domain, has no dependencies, and can be compiled right into the app. It does have a few drawbacks, though: it's only compatible with TrueType fonts and the performance is on the slower side. Still, this makes it possible to use TrueType vector fonts on any platform, so the trade-off is worth it. In the future, at least when it comes to mobile devices, it would make sense to take advantage of native text rendering APIs for faster performance, wider format compatibility, and built-in bidirectional text support.

stb_truetype takes its sweet time rasterizing glyphs, so we'll want to process each one only once. The output format is an 8-bit monochrome bitmap, so a format conversion is required when copying the pixels to the 32-bit RGBA glyph cache texture. The RGB channels are all white; all the information is in the alpha channel. This way text color can be chosen dynamically when drawing with a simple color modulation — GPUs do that practically for free. Alpha blending is used for properly mixing contour pixels with the background color.
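To make the format conversion concrete, here is a minimal sketch of expanding an 8-bit coverage bitmap into white RGBA pixels. The function name, the stride parameters, and the R, G, B, A byte order are my assumptions for illustration; the actual code in Lagrange and the pixel format of its cache texture may well differ.

```c
#include <stddef.h>
#include <stdint.h>

/* Expand an 8-bit coverage bitmap (the kind of output a glyph rasterizer
   produces) into 32-bit pixels. RGB is always white; the coverage value
   goes into the alpha channel. Byte order in memory is assumed to be
   R, G, B, A. Strides are given in bytes. */
static void coverage_to_rgba(const uint8_t *src, int width, int height,
                             int srcStride, uint8_t *dst, int dstStride) {
    for (int y = 0; y < height; y++) {
        const uint8_t *in  = src + (size_t) y * srcStride;
        uint8_t       *out = dst + (size_t) y * dstStride;
        for (int x = 0; x < width; x++) {
            out[4 * x + 0] = 255;   /* R */
            out[4 * x + 1] = 255;   /* G */
            out[4 * x + 2] = 255;   /* B */
            out[4 * x + 3] = in[x]; /* A = glyph coverage */
        }
    }
}
```

With the texture set up like this, the text color can be picked at draw time with SDL's texture color modulation (`SDL_SetTextureColorMod`), and regular alpha blending takes care of the antialiased edges.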

The cache doesn't _have_ to be 32-bit; this is mostly for wider compatibility and because SDL doesn't offer that many formats. If I used a custom shader for rendering text, the texture could have a simple R8 format, for example, and no conversion would be needed.

A subpixel interlude

The lower the display resolution, the more important it is to deal with subpixel positioning of glyphs. Variable-width fonts will not neatly fall on full pixel coordinates, especially when they are scaled to different sizes. You'll easily end up with irregular spacing between glyphs.

One solution is hinting: fonts can contain information about how the shapes should be tweaked in minor ways so they look better when rasterized onto a grid of pixels. However, stb_truetype does not support hinting, and personally I prefer to keep the glyph shapes unmodified so their appearance remains intact.

However, since we are caching rasterized glyphs, we can't just offset them to a random subpixel position (at least not without the help of custom shaders and more complex storage formats). As a compromise, Lagrange renders two variants of each glyph: one at zero offset, and one at half-pixel offset. This cuts the maximum error to half without exploding the size of the glyph cache. The text renderer is able to track glyph positioning with arbitrary accuracy, and select which glyph variant to use at any given position inside a run.

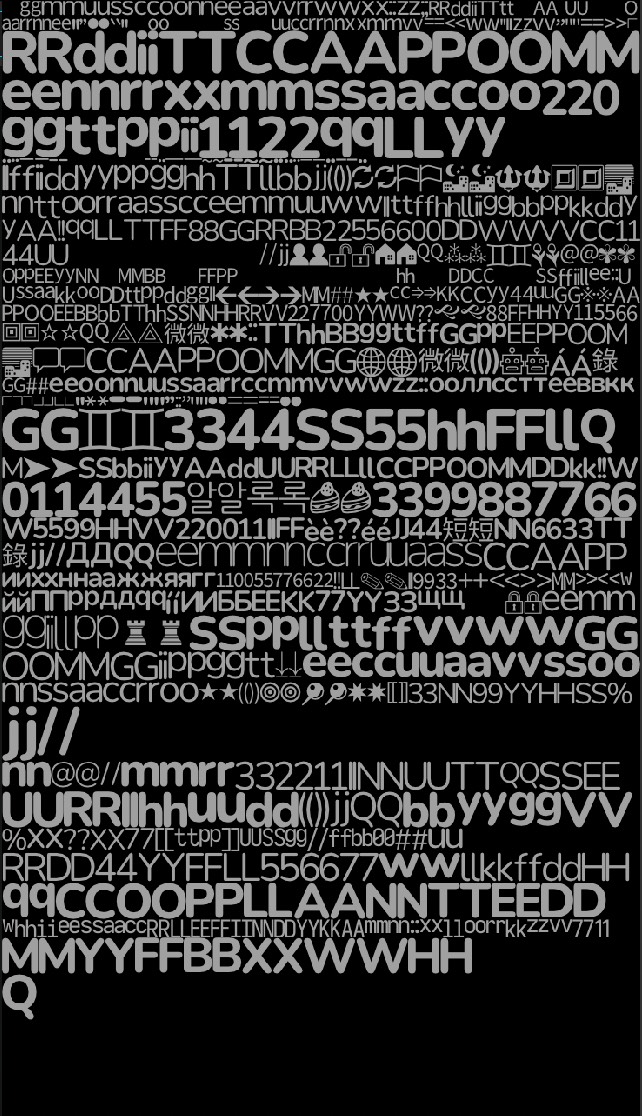

If you take a closer look at the image in the previous section, you'll note that there are indeed two slightly different copies of each glyph.
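Choosing between the two variants could look roughly like the following. This is only a sketch of the idea: `GlyphPlacement`, `place_glyph()` and the rounding rule (snap to the nearest half pixel) are hypothetical and not taken from the Lagrange sources.

```c
/* Result of snapping a fractional x position to the cached glyph variants. */
typedef struct {
    int pixelX;   /* whole-pixel column to copy the glyph to */
    int variant;  /* 0 = rasterized at zero offset, 1 = at half-pixel offset */
} GlyphPlacement;

/* Floor without needing the math library. */
static int floor_to_int(float v) {
    const int i = (int) v;
    return (v < (float) i) ? i - 1 : i;
}

/* Snap the exact position `x` to the nearest half pixel and split the
   result into a whole-pixel column and a variant index. */
static GlyphPlacement place_glyph(float x) {
    const int halfSteps = floor_to_int(x * 2.0f + 0.5f);
    GlyphPlacement p;
    p.variant = ((halfSteps % 2) + 2) % 2;    /* also correct for negatives */
    p.pixelX  = (halfSteps - p.variant) / 2;  /* floor division by two */
    return p;
}
```

For example, a glyph at x = 10.3 ends up at pixel 10 using the half-pixel variant, so it is drawn as if it were at 10.5. With this rounding rule the drawn position is never more than a quarter pixel away from the exact one.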

There is a catch, though, when it comes to monospace fonts where box drawing characters are used. There it is very important to make sure glyphs actually _do_ fit on the pixel grid exactly, or there can be fine subpixel gaps between the glyphs. This will not do if a continuous line is what we want, for example. Lagrange applies an additional horizontal scaling to each glyph of a monospace font, so that its width is a multiple of full pixels. When zooming in and out on a page, you will notice this as preformatted text appearing to slightly change its aspect ratio. The artifact is more pronounced on low-resolution displays.
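The extra scaling factor is simple to compute. Here is a small sketch, assuming the advance width is rounded to the nearest whole pixel; `monospace_x_scale()` is a made-up name and the real rounding rule in Lagrange may be different.

```c
/* Horizontal scale factor that makes a monospace advance width (in pixels,
   must be greater than zero) land on a whole number of pixels. */
static float monospace_x_scale(float advance) {
    int whole = (int) (advance + 0.5f);  /* round to the nearest pixel */
    if (whole < 1) {
        whole = 1;                       /* never collapse to zero width */
    }
    return (float) whole / advance;
}
```

A glyph with an advance of 7.6 pixels gets stretched by about 5% to fill exactly 8 pixels. stb_truetype's bitmap functions take separate horizontal and vertical scale factors, so a result like this can be multiplied into the horizontal one while the vertical one stays as it is.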

Runs of text

There is a function called `run_Font_()` that handles a single text string: measuring, drawing, word wrapping, ANSI escapes, internal escape sequences for changing color, special Unicode codepoints, and newlines. This is a low-level function, so things like bidi should be handled higher up. There are a bunch of wrappers for `run_Font_()` for conveniently measuring dimensions, advance width, and actually drawing text with various alignment modes (left/right/centered).

I won't go deep into the details here, but during rendering `run_Font_()` basically just copies glyphs from the cache to the destination surface. This is a fairly fast operation, with the exception of kern pair lookups. Kerning is required for variable-width fonts so certain character pairings have a more natural spacing between them. Some common pairs could be cached for quick access, but currently each pair is looked up via stb_truetype individually when iterating through the characters in a string.
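A cache for kern pairs is not something Lagrange has at the moment, but one possible shape for it is a small direct-mapped table in front of the slow lookup. Everything in this sketch is hypothetical (`KernCache`, `kern_advance()`, the table size, the hash). In a real app the callback would wrap something like stb_truetype's `stbtt_GetCodepointKernAdvance()`; here a counting stand-in is used so the example is self-contained.

```c
#include <stdint.h>

#define KERN_CACHE_SIZE 1024  /* must be a power of two */

/* Slow path: whatever actually looks up the kerning for a pair. */
typedef int (*KernLookupFn)(void *font, uint32_t left, uint32_t right);

typedef struct {
    uint32_t left, right;
    int      advance;
    int      valid;
} KernEntry;

typedef struct {
    KernEntry    entries[KERN_CACHE_SIZE];
    KernLookupFn slowLookup;
    void        *font;
} KernCache;

static void init_KernCache(KernCache *d, KernLookupFn fn, void *font) {
    for (int i = 0; i < KERN_CACHE_SIZE; i++) {
        d->entries[i].valid = 0;
    }
    d->slowLookup = fn;
    d->font       = font;
}

/* Returns the kerning adjustment for a pair, calling the slow lookup only
   when the pair is not in its slot. A colliding pair simply overwrites it. */
static int kern_advance(KernCache *d, uint32_t left, uint32_t right) {
    const uint32_t slot =
        ((left * 2654435761u) ^ (right * 40503u)) & (KERN_CACHE_SIZE - 1);
    KernEntry *e = &d->entries[slot];
    if (!e->valid || e->left != left || e->right != right) {
        e->left    = left;
        e->right   = right;
        e->advance = d->slowLookup(d->font, left, right);
        e->valid   = 1;
    }
    return e->advance;
}

/* Stand-in for the slow lookup, for demonstration only. `font` points to
   an int that counts how many times the slow path was taken. */
static int demo_slow_lookup(void *font, uint32_t left, uint32_t right) {
    (*(int *) font)++;
    return (left == 'A' && right == 'V') ? -2 : 0;
}
```

Asking for the same pair twice in a row only reaches the slow lookup once. Whether this is a net win depends on how expensive the real lookup is compared to the hashing, so it would need measuring first.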

There is some redundant work here since strings are typically measured before they are drawn. One should store the glyph layout during measuring and reuse it for faster drawing. This may be a good thing to do when implementing bidi layout, because that requires additional metadata about the run anyway, and there is no need to repeat the same layout calculations.

Gemtext layout

Once we receive a Gemtext document, it needs to be laid out before it can be presented on screen. This is done in GmDocument. The general idea is that the source is divided into lines, and each line is word wrapped and possibly decorated with additional runs for list bullets, link icons, and other such purely visual elements. The end result is an array of text runs that point back to snippets of text in the source document, so there is only a single copy of the source document in memory.
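The "runs point back to the source" part can be illustrated with a tiny sketch. `TextRun` and `split_lines()` are simplified stand-ins I made up for this example; the real GmDocument runs carry much more information (font, color, position, and so on), and word wrapping is left out entirely.

```c
#include <stddef.h>
#include <string.h>

/* A run references a span of the source text. It does not own a copy. */
typedef struct {
    const char *start;
    const char *end;   /* one past the last byte of the run */
} TextRun;

/* Splits `source` into one run per line. The runs point into `source`, so
   it must stay alive for as long as the runs are used. Returns the number
   of runs written, at most `maxRuns`. */
static size_t split_lines(const char *source, TextRun *runs, size_t maxRuns) {
    size_t      count = 0;
    const char *pos   = source;
    while (*pos && count < maxRuns) {
        const char *nl  = strchr(pos, '\n');
        const char *end = nl ? nl : pos + strlen(pos);
        runs[count].start = pos;
        runs[count].end   = end;
        count++;
        pos = nl ? nl + 1 : end;
    }
    return count;
}
```

Because a run is just a pair of pointers, wrapping a long line into several runs or adding a decoration run for a bullet costs very little memory regardless of how long the document is.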

This layout procedure may take some time if the document is long. You will notice this as a pause after resizing a tab, for instance. Eventually I may improve this by doing the layout in the background so the UI thread isn't blocked.

It's good to note that after this point the content is source format agnostic. If we had, say, a Markdown document, it could be laid out similarly and the page renderer should be able to handle it just fine. (Well, inline links would need a little tweaking when drawn.)

Buffering visible content

The final piece of the puzzle is the drawing of the text runs according to the predetermined layout. My initial implementation was to simply call `run_Font_()` on each visible run whenever the document needs to be drawn, but this is too slow on lower-end devices, and would be wasteful on mobile. What we want to do is pre-render the document into a texture, and just quickly blit it to the screen when needed. After all, the document is mostly static content, so redrawing it constantly makes no sense.

The immediate problem is that we most likely can't fit the entire document in a single texture, so only a part of it can be buffered at a time.

DocumentWidget's view buffer (VisBuf) is where the actual document contents are kept for drawing onto the screen. VisBuf is composed of four half-window sized textures, so it's 2x the window size. In other words, the visible viewport at any given time is half the total buffer size.

                ─ ─ ─ ─ ─ ─ ─ ─ ─ ─ ─ ─
               │                       │
    Buffer 0        Offscreen (top)
               │                       │
               ╔═══════════════════════╗
               ║                       ║
    Buffer 1   ║    Visible content    ║  ▲
               ║                       ║  │
               ║─ ─ ─ ─ ─ ─ ─ ─ ─ ─ ─ ─║  │ VIEWPORT
               ║                       ║  │ freely movable
    Buffer 2   ║    Visible content    ║  ▼
               ║                       ║
               ╚═══════════════════════╝
               │                       │
    Buffer 3      Offscreen (bottom)
               │                       │
                ─ ─ ─ ─ ─ ─ ─ ─ ─ ─ ─ ─

The contents of each buffer texture are kept unchanged as long as possible; the only updates are addition of newly exposed text runs and dynamic updates such as link hover states.

When scrolling, ideally no new text needs to be drawn since we have offscreen content buffered as well. The four buffers in VisBuf are cycled when the viewport is moved far enough, retaining as much of the content as possible. Hence the use of half-sized textures. One could use smaller textures, too, to retain more of the viewport area, but that would increase the overhead of drawing lines that cross buffer boundaries: each such line has to be drawn into both of the buffers it touches.
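The cycling could be sketched like this. Note that this is my simplified illustration and not the actual VisBuf code: the struct layout, the names, and the exact rule for when to cycle are all assumptions, and an int stands in for the texture handle.

```c
#define NUM_BUFFERS 4

typedef struct {
    int top;      /* document y coordinate of the buffer's first row */
    int valid;    /* nonzero if the contents have been drawn */
    int texture;  /* stand-in for a texture handle */
} Buffer;

typedef struct {
    Buffer buffers[NUM_BUFFERS];  /* kept in top-to-bottom order */
    int    bufHeight;             /* half the window height */
} VisBufSketch;

/* Places one buffer above the viewport, two under it, and one below it. */
static void init_VisBufSketch(VisBufSketch *d, int windowHeight, int viewTop) {
    d->bufHeight = windowHeight / 2;
    for (int i = 0; i < NUM_BUFFERS; i++) {
        d->buffers[i].top     = viewTop + (i - 1) * d->bufHeight;
        d->buffers[i].valid   = 0;
        d->buffers[i].texture = i;
    }
}

/* Cycles buffers until the viewport is fully covered again. Buffers that
   still overlap the covered range keep their contents. */
static void reposition_VisBufSketch(VisBufSketch *d, int viewTop) {
    const int viewBottom = viewTop + 2 * d->bufHeight;
    /* Scrolled down past the last buffer: move the topmost to the bottom. */
    while (viewBottom > d->buffers[NUM_BUFFERS - 1].top + d->bufHeight) {
        Buffer recycled = d->buffers[0];
        for (int i = 0; i < NUM_BUFFERS - 1; i++) {
            d->buffers[i] = d->buffers[i + 1];
        }
        recycled.top   = d->buffers[NUM_BUFFERS - 2].top + d->bufHeight;
        recycled.valid = 0;  /* needs to be redrawn with new content */
        d->buffers[NUM_BUFFERS - 1] = recycled;
    }
    /* Scrolled up past the first buffer: move the bottommost to the top. */
    while (viewTop < d->buffers[0].top) {
        Buffer recycled = d->buffers[NUM_BUFFERS - 1];
        for (int i = NUM_BUFFERS - 1; i > 0; i--) {
            d->buffers[i] = d->buffers[i - 1];
        }
        recycled.top   = d->buffers[1].top - d->bufHeight;
        recycled.valid = 0;
        d->buffers[0]  = recycled;
    }
}
```

Small scrolls do not touch the buffers at all, and a larger scroll recycles only the texture that is farthest from the viewport. A long jump ends up invalidating all four, which is fine since none of the old content would be visible anyway.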

Finally, in v1.4, buffer contents outside the viewport are filled one line at a time while the viewport remains stationary, so future scrolls can occur immediately without drawing any text. The end result is that whenever the UI needs to be refreshed, the page is already fully rendered and ready to be copied to the screen.

And there you have it! That is how Lagrange renders text.

On relative simplicity

As demonstrated here, GUIs have a lot of inherent complexity to them. I like to think of Lagrange as a simple app, but relative to what exactly? It's 63K lines of C at the moment. For me that has been a joy to work with compared to 480K lines of C++ in the Doomsday Engine, for example. Part of it is of course that C is just simpler overall compared to C++.

Typically GUI apps offload all of this to common frameworks, and for good reason: much of the functionality is indeed common, and getting things like text rendering right is difficult. The big advantage of building one's own framework is that it can be tailor-made and optimized for the application. General purpose GUI frameworks by design must support all kinds of use cases, making them heavier and more complex.

📅 2021-04-16

CC-BY-SA 4.0

The original Gemtext version of this page can be accessed with a Gemini client: gemini://skyjake.fi/gemlog/2021-04_rendering-text.gmi